Abstract

We present DiffusionAtlas, a diffusion-based video editing framework that achieves both frame consistency and high fidelity when editing the appearance of video objects. Despite their success in image editing, diffusion models still struggle with video editing because the object's appearance must remain spatiotemporally consistent across frames. Atlas-based techniques, in contrast, allow edits made on layered representations to be propagated consistently back to the frames. However, because they edit the texture atlas on a fixed UV mapping field, they often fail to produce edits that adhere to the user-provided textual or visual conditions. Our method leverages a visual-textual diffusion model to edit objects directly on the atlases, ensuring coherent object identity across frames. We design a loss term with atlas-based constraints and use a pretrained text-driven diffusion model as pixel-wise guidance to refine shape distortions and correct texture deviations. Qualitative and quantitative experiments show that our method outperforms state-of-the-art methods in consistent, high-fidelity video-object editing.

Method

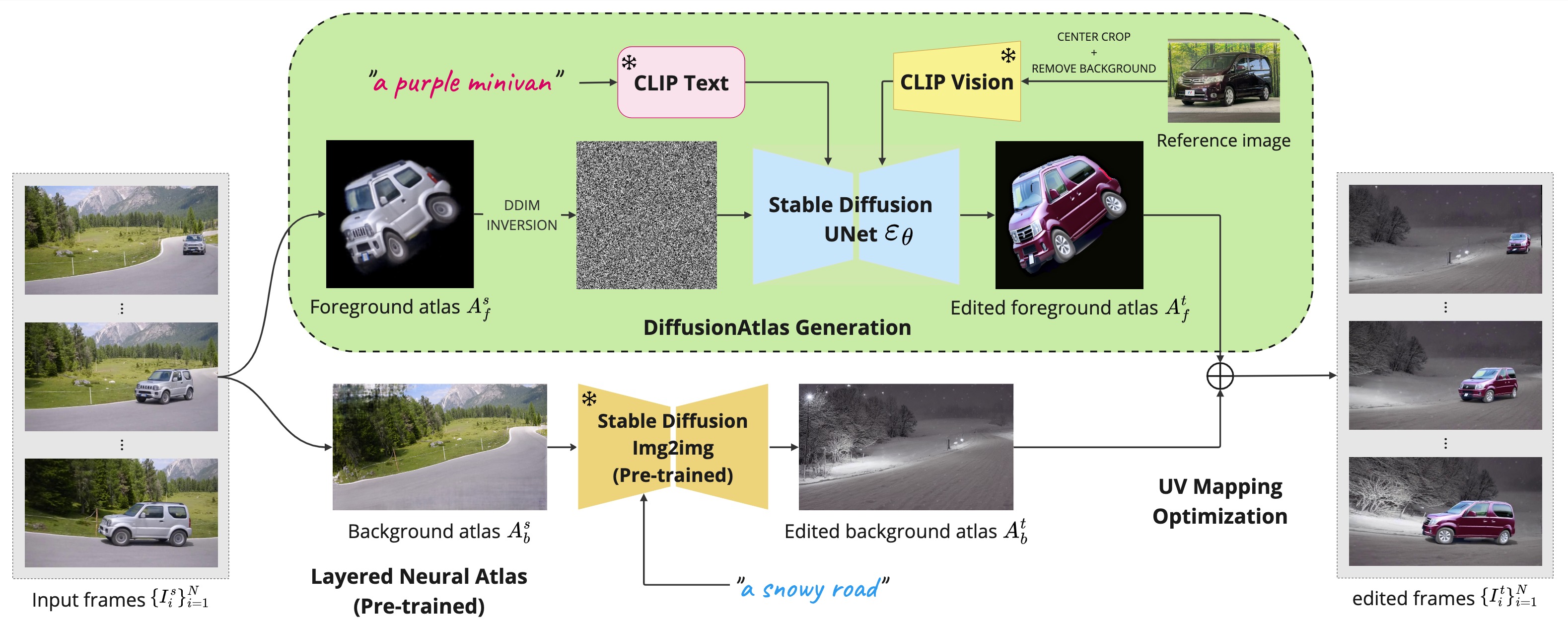

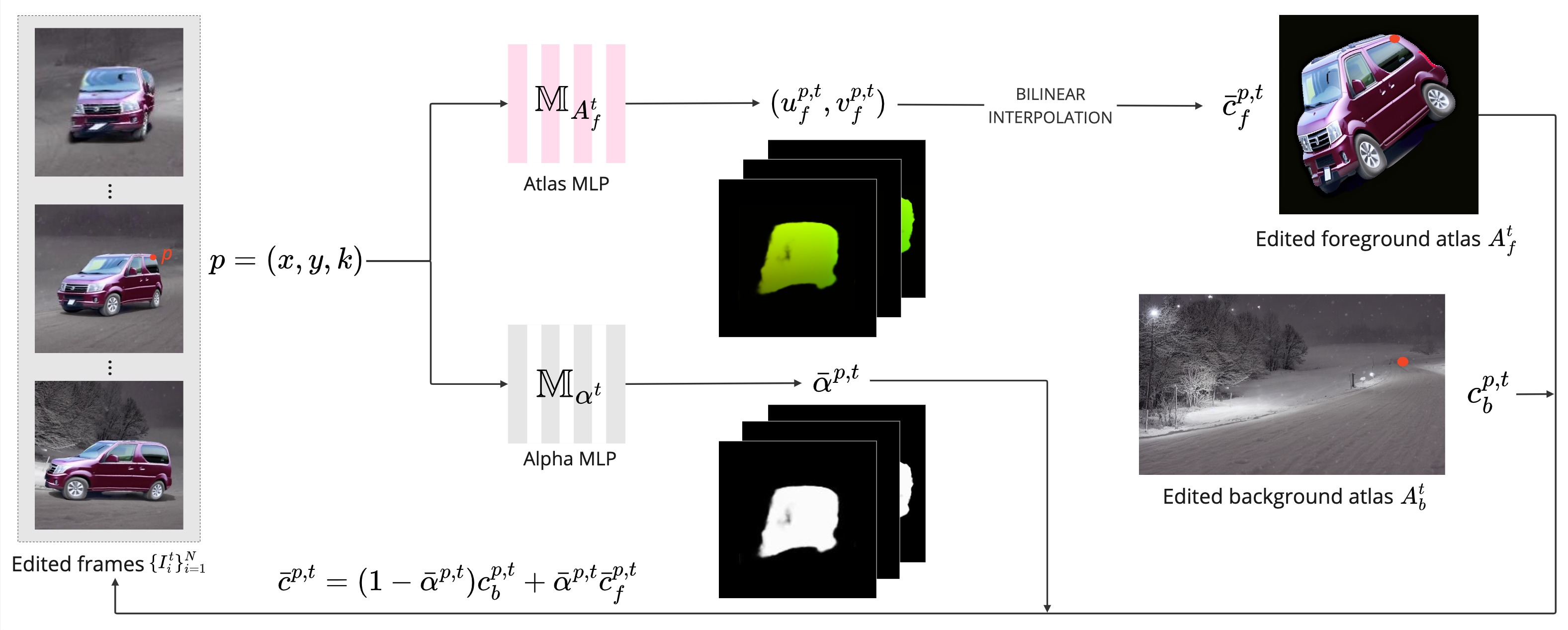

Given a source video, a text prompt (e.g., “a purple minivan”), and a reference image, our method enables consistent video editing with high-fidelity output. We introduce a one-shot tuning mechanism for diffusion models that updates the attention blocks for the source structure and edits the objects directly on the atlas textures. After obtaining the edited foreground atlas, we optimize the UV mappings using constraints derived from the original UV-space structure and a pretrained diffusion model, so that the edited atlas textures propagate back to the frames more naturally.

UV mapping optimization process.
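
To make the atlas-to-frame propagation step concrete, the following is a minimal sketch of how one edited atlas can be rendered into every frame through per-frame UV maps. It is an illustration under stated assumptions, not the authors' implementation: the function names `sample_atlas` and `render_frames`, the normalized `[0, 1]` UV convention, and the use of bilinear sampling are all hypothetical choices, and the UV optimization and diffusion guidance described above are not shown.

```python
import numpy as np

def sample_atlas(atlas, uv):
    """Bilinearly sample an atlas of shape (H_a, W_a, C) at UV coordinates.

    uv has shape (..., 2) with u (horizontal) and v (vertical) in [0, 1].
    This normalized convention is an assumption made for illustration.
    """
    h, w = atlas.shape[:2]
    x = np.clip(uv[..., 0], 0.0, 1.0) * (w - 1)
    y = np.clip(uv[..., 1], 0.0, 1.0) * (h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (x - x0)[..., None]  # horizontal interpolation weight
    wy = (y - y0)[..., None]  # vertical interpolation weight
    top = atlas[y0, x0] * (1 - wx) + atlas[y0, x1] * wx
    bottom = atlas[y1, x0] * (1 - wx) + atlas[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def render_frames(atlas, uv_maps):
    """Render all frames from one shared atlas.

    uv_maps has shape (T, H, W, 2); the result has shape (T, H, W, C).
    """
    return sample_atlas(atlas, uv_maps)

# Hypothetical toy data: a 4x4 RGB atlas standing in for an edited atlas.
edited_atlas = np.arange(4 * 4 * 3, dtype=float).reshape(4, 4, 3)

# Two 2x2 frames; frame 1 sees the object shifted by 0.25 in u.
v, u = np.meshgrid([0.25, 0.5], [0.25, 0.5], indexing="ij")
uv0 = np.stack([u, v], axis=-1)
uv1 = uv0 + np.array([0.25, 0.0])
uv_maps = np.stack([uv0, uv1])

frames = render_frames(edited_atlas, uv_maps)
print(frames.shape)
```

Because every frame reads from the same atlas, pixels in different frames that map to the same UV coordinate receive the same color, which is the source of the frame consistency. It also shows the limitation noted in the abstract: with the UV maps held fixed, an edit can change texture but not the object's projected shape.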

Bibtex